I've picked up a preference for tools I've coded myself, even when they're nowhere near the best choice; it's time to explicitly put some time into learning the great wealth of shared tools out there, and aim a little higher...

Version control is certainly part of "coding well with others", but there's a difference between "dumping stuff in chronologically" and the kind of discipline needed to collaborate well. Sometimes the discipline isn't as hard as it looks at first...

I recently joined a tiny fast-moving team that was already using git,

and we're trying to be "as disciplined as we can afford to be" - the

primary external motivator is to keep a stable enough master that

we can safely run demos off of it, but at the same time have those

demos include recent progress. Large chunks of work get done on

branches and reviewed; small things, especially things that impact one

file and are easily tested on their own, can go straight in with less

fanfare.

Problem is that you start one of these small changes, committing as

you go, when you suddenly realize it's become a large one and

"probably should have been on a branch all along". Well, if you

haven't git pushed yet (because you're working on master and hoping

you can just push a working change - it's not just luck that this ends

up being true so you can use this trick) you can "retcon" that with

two commands:

# You're already in the checkout, on master.

# Create a local branch of the work...

$ git checkout -b best-intentions

# now master and best-intentions both have your changes.

# "unzip" master back to the push point:

$ git branch -f master $(git rev-parse origin/master)

# now master has been "reconnected" at the point where you last

# pushed; the next pull will update that smoothly...

#

# Bonus points: track the newly created branch properly

$ git push --set-upstream origin best-intentions

That's it, now you can get the new branch reviewed properly.

Most of the branches I use at work are quasi-independent feature

branches, but I'm dipping into various ones at various times to help

out, review, or test... and since our product takes a couple of

minutes to build from scratch, the "one checkout that switches

branches" model doesn't make any sense at all (really it's never

made sense on anything large that I've worked on; for the cases where

it did work, using git stash instead has always worked better) so

I have a bunch of entire source and build trees.

Usually when I start the day I want to get all of them "caught up" to

everyone else's work; taking advantage of the "git extension script"

mechanism, I have a trivial git-morning script that finds all of my

checkouts and just does a git pull in each of them. I have not been

brave enough to have it do a git merge origin/master too, but I'll

probably try the experiment soon just to see if it saves more time

than it wastes from having to do all the cleanup first thing.
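A minimal sketch of what such a script could look like (this is not the actual git-morning, just an illustration written for Python 3). It assumes every checkout is a direct child of one directory; the `~/src` default and the function names are hypothetical. Saved as an executable named `git-morning` somewhere on `PATH`, git's extension mechanism would let it run as `git morning`.

```python
#!/usr/bin/env python3
"""Sketch of a git-morning helper: run `git pull` in every checkout."""
import subprocess
from pathlib import Path

# Hypothetical layout: every checkout is a direct child of this directory.
DEFAULT_ROOT = Path.home() / "src"


def find_checkouts(root):
    """Return the direct subdirectories of root that contain a .git entry."""
    root = Path(root)
    if not root.is_dir():
        return []
    return sorted(p for p in root.iterdir() if (p / ".git").exists())


def pull_all(root=DEFAULT_ROOT):
    """Run `git pull` in each checkout; return the checkouts where it failed."""
    failed = []
    for checkout in find_checkouts(root):
        print("== %s" % checkout)
        result = subprocess.run(["git", "pull"], cwd=str(checkout))
        if result.returncode != 0:
            failed.append(checkout)
    return failed
```

To make it a real command, the file would end with a call such as `raise SystemExit(1 if pull_all() else 0)`. Collecting the failures rather than stopping at the first one means a single dirty tree doesn't block the rest of the morning catch-up.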

Decided recently that using print directly instead of python logging was a minor instance of "coding when I should be using"; likewise daemonizing/restarting code shouldn't be done by hand either, so I finally figured out how to actually use upstart "user configs". (Together they made a good set of things to experiment with while the net was offline due to the northeast blizzard.)

Turns out that both of them were mostly straightforward, except for one impossible/undocumented step. Even on ubuntu, it's impossible to start user scripts explicitly (though you can trigger them on events, which almost counts) because these lines are missing from /etc/dbus-1/system.d/Upstart.conf:

<allow send_destination="com.ubuntu.Upstart"

send_interface="com.ubuntu.Upstart0_6.Job"

send_type="method_call" send_member="Start" />

<allow send_destination="com.ubuntu.Upstart"

send_interface="com.ubuntu.Upstart0_6.Job"

send_type="method_call" send_member="Stop" />

(Similar lines are needed for restart/reload.) I haven't studied the security implications (they don't matter for the machines I'm using this on, but they might matter for your machine), and I haven't yet checked whether these lines are also missing from Quantal and, if they are, what bug report I should file. (Filing bug reports instead of kludging around things is another kind of long term vs. short term tradeoff, but that's the subject for a later "codes well with others" post.)

Python logging has a similar "missing chunk of magic". To be fair, if all you're trying to do is code more cleanly, and have easy to use instrumentation for later, logging.basicConfig and changing your print calls to logging.warn with explicit format strings is enough. However, much of what I write should run as services (under upstart, see above) and should actually syslog... and that's supposed to be easy to do with logging. In the past, I've just used syslog directly, and now that I dig into it, I see why: in order to use logging with syslog, you need

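import logging
import os
import sys
from logging.handlers import SysLogHandler

# syslog-style "name[pid]: " prefix, since SysLogHandler won't add one
syslog_tag = "%s[%d]: " % (os.path.basename(sys.argv[0]), os.getpid())
logging.basicConfig(level=logging.DEBUG)
syshandler = SysLogHandler(address="/dev/log")
sysformatter = logging.Formatter(syslog_tag + "%(message)s")
syshandler.setFormatter(sysformatter)
logging.getLogger().addHandler(syshandler)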

It turns out that SysLogHandler doesn't use openlog (because when logging was written, the syslog module apparently wasn't threadsafe), so you have to cook up your own process name/pid tagging. Then, even though /dev/log has been pretty much the only way to access syslog for the past decade, the default is still UDP to port 514, so you have to override that as well. Once you drop in this 6-line blob, you can use logging in the normal fashion; figuring out a way to fold it into one helper that gets pushed upstream would be nice.
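One way the blob might be folded into a helper, as a rough sketch for Python 3 rather than anything that exists upstream. The function names (`syslog_tag`, `syslog_formatter`, `log_to_syslog`) are made up for illustration, and unlike the blob above this version leaves `logging.basicConfig` (the stderr output) to the caller.

```python
import logging
import os
import sys
from logging.handlers import SysLogHandler


def syslog_tag(argv0=None, pid=None):
    """Build the "name[pid]: " prefix that openlog would normally supply."""
    name = os.path.basename(argv0 if argv0 is not None else sys.argv[0])
    return "%s[%d]: " % (name, os.getpid() if pid is None else pid)


def syslog_formatter(tag):
    """Formatter that prepends the tag to every message.

    Any '%' in the tag is doubled so an odd program name can't be
    mistaken for a format directive.
    """
    return logging.Formatter(tag.replace("%", "%%") + "%(message)s")


def log_to_syslog(address="/dev/log", level=logging.DEBUG):
    """Attach a tagged SysLogHandler to the root logger and return it."""
    handler = SysLogHandler(address=address)
    handler.setFormatter(syslog_formatter(syslog_tag()))
    root = logging.getLogger()
    root.setLevel(level)
    root.addHandler(handler)
    return handler
```

A service would then call `log_to_syslog()` once at startup and use `logging.info(...)` and friends as usual. Keeping the tag and formatter as separate functions makes the fiddly part checkable on a machine that has no /dev/log.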

(inspired by http://blog.doughellmann.com/2012/12/new-years-python-meme.html who credits http://blog.ziade.org/2012/12/23/new-years-python-meme-2012/ )

ipython-notebook. Of course, it's the second or third time I've "discovered" it, but it's gotten very sophisticated.

Pushed some limits for mock testing on Unix - a python script that creates Subversion and Git repositories and substitutes calls to sendmail, for example. Also spent a lot of quality time with boto...

A couple of patches to kivy and pypi-install - I've been doing a lot more bespoke automation and release engineering that are at the top of a tall pyramid of open source that actually works, so there have been fewer things for me to dive in and debug...

Reddit r/python and Python Planet - and anything that comes by on twitter; I'm not a hugely faithful blog reader this decade, but twitter is a great circulator of ideas...

What are the three top things you want to learn in 2013?

ipython-notebook

more robotics - with an emphasis on actually controlling hardware.

What are the top software, app, or lib you wish someone would write in 2013?

A low-overhead mobile (Android, but Firefox OS would be tempting too...) native python app development environment.

The Free On-line Stanford AI Class didn't have the programming problems that the in-person class did, due to lack of grading resources - but we did get a simple, optional, mini shredder challenge where it was suggested that we use NLP techniques to reassemble 19 vertical (2 character wide) slices of a paragraph back together in the right order. The popular approach was to simply produce some letter statistics (usually bigram) on English words, and then glue stripes together picking the addition that improves the score most.
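To make the greedy idea concrete, here is a toy Python 3 sketch. It is not the code from the class or from my solution: the helper names are invented, it scores only the bigram that straddles each seam (the pair inside a 2-character strip never changes), and it uses a crude floor value for unseen bigrams instead of proper smoothing.

```python
import math
from collections import Counter


def shred(lines, width=2):
    """Cut text lines into vertical strips, each `width` characters wide."""
    length = max(len(line) for line in lines)
    padded = [line.ljust(length) for line in lines]
    return [[row[i:i + width] for row in padded]
            for i in range(0, length, width)]


def bigram_logprobs(corpus):
    """Return (log-probability table, floor value for unseen bigrams)."""
    counts = Counter(corpus[i:i + 2] for i in range(len(corpus) - 1))
    total = sum(counts.values())
    table = {bi: math.log(n / total) for bi, n in counts.items()}
    return table, math.log(0.1 / total)  # crude floor, not real smoothing


def seam_score(left, right, table, floor):
    """Score placing strip `right` immediately after strip `left`."""
    return sum(table.get(l[-1] + r[0], floor) for l, r in zip(left, right))


def greedy_assemble(strips, table, floor):
    """Start from strip 0; repeatedly glue on whichever strip scores best."""
    order = [0]
    remaining = set(range(1, len(strips)))
    while remaining:
        candidates = []
        for i in remaining:
            on_right = seam_score(strips[order[-1]], strips[i], table, floor)
            on_left = seam_score(strips[i], strips[order[0]], table, floor)
            candidates.append((on_right, i, "right"))
            candidates.append((on_left, i, "left"))
        _, best, side = max(candidates)
        if side == "right":
            order.append(best)
        else:
            order.insert(0, best)
        remaining.remove(best)
    return order
```

`greedy_assemble` returns the strip indices in their proposed left-to-right order. Being greedy, it never revisits a choice, so one bad early seam stays in the result.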

Most of the approaches discussed afterwards on the aiclass Reddit involved getting a big chunk correct using machine techniques and then fixing up the last chunks by hand; my personal approach found "Claude" and "Shannon" recognizably, leaving very little hand-work. (Note that the actual shredder challenge was won by a team using a hybrid computer-vision/nlp/human approach, so one could argue that this is a state-of-the-art method.)

In my example, I grabbed /usr/share/dict/words and chewed it into statistics by hand; not very sentence like, but by pairing spaces with initial and terminal letters I got good word-assembly out of it. Later, I figured that a good example of "coding well with others" would be to actually use the widely acclaimed NLTK Natural Language Toolkit, especially if that showed that the additional effort of understanding an existing solution would have some measurable benefit towards executing that solution.

The first approach was to replace my dictionary extractor:

import string

ascii_words = [word.lower().strip() for word in open("/usr/share/dict/words")
               if word.strip() and not (set(word.strip()) - set(string.ascii_letters))]

with code that pulled the words from the Project Gutenberg sample:

import nltk

nltk.download("gutenberg")

ascii_words = nltk.corpus.gutenberg.words()

Then I reran the code (as a benchmark to see how much of a difference having "realistic" words-in-sentences probabilities would make.) Inconveniently (but educationally), it made so much of a difference that the first run produced a perfect reassembly of the 19 slices, with no need for further human work (either on the output data or on the code...) Still, there are a bunch of ad-hoc pieces of code that could be replaced by pre-existing functions - which should in theory be more robust, and perhaps also easier to share with others who are familiar with NLTK without forcing them to unravel the ad-hoc code directly.

A thread on nltk-users points out the master identifier index where one can find a bunch of tools that relate to word-pair bigrams, but also the basic nltk.util.bigrams that we're looking for. In fact, our existing code was a somewhat repetitive use of re.findall:

for ascii_word in ascii_words:
    for bi in re.findall("..", ascii_word):
        in_word_bigrams[bi] += 1
    for bi in re.findall("..", ascii_word[1:]):
        in_word_bigrams[bi] += 1

which turns into

for ascii_word in ascii_words:
    for bi in nltk.util.bigrams(ascii_word):
        in_word_bigrams["".join(bi)] += 1

(in_word_bigrams is a defaultdict(int); the join is necessary to keep this a piecewise-local change - otherwise I'd have to look at the later scoring code which uses substrings and change it to use tuples.)
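As a sanity check on that replacement, here is a small stdlib-only Python 3 sketch (no NLTK required) showing that the two offset `re.findall` passes and a sliding window over adjacent characters count the same bigrams. The function names are invented for the comparison, and `zip(word, word[1:])` merely stands in for what I understand `nltk.util.bigrams` to yield for a string.

```python
import re
from collections import Counter


def bigrams_by_regex(word):
    """Two non-overlapping passes, offset by one character."""
    return re.findall("..", word) + re.findall("..", word[1:])


def bigrams_by_window(word):
    """Every adjacent pair of characters, joined back into a string."""
    return ["".join(pair) for pair in zip(word, word[1:])]


for word in ["hello", "banana", "ab", "a", ""]:
    # The order differs, but the counts are identical.
    assert Counter(bigrams_by_regex(word)) == Counter(bigrams_by_window(word))
```

The regex version emits the even-offset pairs first and the odd-offset pairs second, which is why only the counts (not the lists) are compared.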

The use of nltk.util.trigrams is nearly identical, replacing three more re.findall loops.

It turns out we can go another step (perhaps a questionable one) and replace the defaultdict above with nltk.collocations.FreqDist() giving this:

in_word_bigrams = nltk.collocations.FreqDist()
for ascii_word in ascii_words:
    for bi in nltk.util.bigrams(ascii_word):
        in_word_bigrams.inc("".join(bi))

In practice, most Python developers should be more familiar with defaultdict (those with long term experience might even have a bit of jealousy about it, as it wasn't introduced until Python 2.5.) One benefit of using FreqDist is that it includes a useful diagnostic method, .tabulate, which lets us confirm that the statistics are what we expect from English-language text:

print "In Word Bigrams:"

in_word_bigrams.tabulate(15)

In Word Bigrams:

th he an in er nd re ha ou at en hi on of or

310732 284501 153567 147211 140285 134364 112683 103110 95761 88376 84756 82225 81422 77028 76968

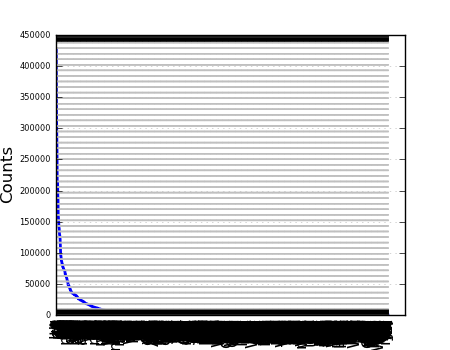

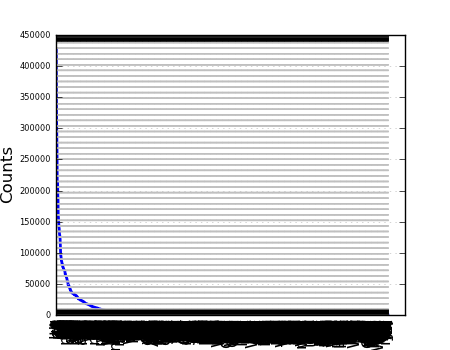

and similarly for the other tables. Even better, adding one more line:

all_bigrams.plot()

uses matplotlib to show us a nice graph that again confirms our suspicion that bigram statistics have a tail, without having to get distracted by the mechanics of doing the plotting ourselves - some past nltk developer decided that frequency distributions were sometimes worth visualizing, and we get to take advantage of that.

The actual task involves coming up with the probability that a given partial combination of strips of text is in English, as the completely unscrambled one will be. Bigram frequency counts aren't directly useful for that; we need to normalize them into probabilities. Again, this isn't much Python code:

bi_total_weight = float(sum(all_bigrams.values()))  # float, so "/" below doesn't truncate to 0
norm_bigrams = dict((k, v / bi_total_weight) for k, v in all_bigrams.items())

but the nltk.probability module has a class that performs this conversion directly:

norm_bigrams_prob = nltk.probability.DictionaryProbDist(all_bigrams, normalize=True)

A quick loop to print out the top ten from both shows that DictionaryProbDist is implementing the same calculation. There is an interface difference: instead of dictionary lookup, DictionaryProbDist provides .prob() and .logprob() methods. Nothing else leaps to the eye as helpful for this particular problem, but it's useful to notice the .generate() method, which is proportional sampling with replacement, basically the core of the Particle Filter algorithm.
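For readers without NLTK at hand, the interface can be imitated in a few lines of Python 3. This is a hypothetical stand-in (the class name `CountProbDist` is invented), not NLTK's implementation; it uses base-2 logs, which is my understanding of what NLTK's `logprob` returns.

```python
import math
import random


class CountProbDist:
    """Hypothetical stand-in: turns raw counts into probabilities."""

    def __init__(self, counts):
        total = float(sum(counts.values()))
        self._probs = {k: v / total for k, v in counts.items()}

    def prob(self, sample):
        """Normalized probability; 0.0 for anything never counted."""
        return self._probs.get(sample, 0.0)

    def logprob(self, sample):
        """Base-2 log of prob(); negative infinity for unseen samples."""
        p = self.prob(sample)
        return math.log2(p) if p > 0 else float("-inf")

    def generate(self, rng=random):
        """Draw one sample with probability proportional to its count."""
        samples = list(self._probs)
        weights = [self._probs[s] for s in samples]
        return rng.choices(samples, weights=weights, k=1)[0]
```

Calling `generate()` repeatedly is sampling with replacement: the distribution is unchanged between draws, which is the property the particle filter resampling step relies on.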

For the final step, we need to apply our bigram (and trigram) probabilities to our partially assembled bits of text, so that we can greedily pick the combinations that are approaching English the most quickly. However, we have bigrams that appear in the target that don't appear in the model corpus; as explained in Unit 5, Section 21, if you assign those missing values a probability of zero, they falsely dominate the entire result. (If you use the model that way anyhow, it does in fact come up with a poor combination of strips.)

In the original implementation, I assigned an arbitrary and non-rigorous "10% of a single sample" value, which was at least effective:

    for i in range(len(word) - 1):
        # go back and do the smoothing right!
        s *= bigrams.get(word[i:i+2], 0.1/bi_total_weight)
    return math.log(s)

It turns out that nltk.probability has some more useful surprises in store: we can simply use LaplaceProbDist directly, since k=1 is sufficient. The general form is also called Lidstone smoothing, so we could use LidstoneProbDist(gamma=k) if we needed to experiment further with smoothing; in the meantime,

norm_bigrams = nltk.probability.LaplaceProbDist(all_bigrams)

    # ...and inside the scoring function:
    for i in range(len(word) - 1):
        s += bigrams.logprob(word[i:i+2])
    return s

simply (and convincingly) "multiplies" probabilities (adding logs, just to get numbers that are easier to handle - that is, easier to read in debugging output.)
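The arithmetic behind that smoothing is small enough to sketch in plain Python 3. This illustrates the Lidstone formula, (count + gamma) / (N + bins * gamma), and is not NLTK's code; the names `lidstone_prob` and `score` are invented, and defaulting `bins` to the number of distinct bigrams seen is an assumption made here for simplicity.

```python
import math


def lidstone_prob(counts, sample, gamma=1.0, bins=None):
    """(count + gamma) / (N + bins * gamma); gamma=1 is Laplace smoothing."""
    if bins is None:
        bins = len(counts)  # assumption: only the bigrams seen so far
    total = sum(counts.values())
    return (counts.get(sample, 0) + gamma) / (total + bins * gamma)


def score(text, counts, gamma=1.0, bins=None):
    """Sum of base-2 log probabilities of every bigram in text."""
    return sum(math.log2(lidstone_prob(counts, text[i:i + 2], gamma, bins))
               for i in range(len(text) - 1))
```

With counts of 3 for "th" and 1 for "he" and four possible bins, "th" gets (3 + 1) / (4 + 4) = 0.5 and an unseen bigram gets 1 / 8, so a missing bigram lowers the score without zeroing it out.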

One interesting result of looking at the logs is that while the chosen strips are typically 2**15 to 2**90 more likely than the second best choice, once we've assembled "Claude Shannon founded" it jumps out that the best next choice (by a factor of "merely" 5) is actually to (incorrectly) add a strip on the left... so at least with the gutenberg corpus, the assemble-to-the-right bias actually helps reach the desired answer, so there's more room to improve the method. The numbers also tell us that it's a path problem, not a result problem - the evaluation function gives a 2**94 higher probability for the correct result than for the (still slightly-scrambled) result that the symmetric (but still greedy) version produces.

It was certainly faster to code this up in raw Python the first time around, but note that this was a single-instance problem, with new but thoroughly explained material, where the code itself was not judged and would not be reused. Once you add any level of reuse (such as a second problem set on the same topic, other related NLP work, or even vaguely related probability work) or any level of additional complexity to the original problem (such as a result that couldn't be judged "by eye"), digging in to NLTK pays off rapidly, with dividends like

- ready-made smoothing, done right (LaplaceProbDist)
- built-in diagnostics (tabulate, plot)
- pointers to related techniques (DictionaryProbDist.generate as relevant to Particle Filters.)
- shared, named abstractions in place of ad-hoc code (LaplaceProbDist, util.bigrams)

The last one is perhaps the most relevant benefit, because even though the code may perform identically, and the line counts are the same, the version that uses the NLTK-supplied abstractions should communicate more clearly to the next human reader of the code - an important "coding well with others" principle.